When doing game development, I often run into a task or two that is just a little too computationally intensive for a smooth framerate. And I set my framerate targets very ambitiously: typically 120Hz, for proper operation on an iPad Pro. At 120Hz, there is only 8.33ms per frame to do everything: rendering, simulation, AI, physics, etc. So the larger computations that threaten to exceed this budget need to be moved off the main thread.

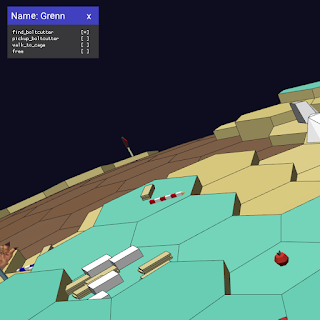

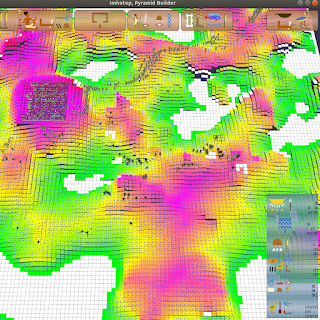

Examples of things that I have computed on their own threads in my previous projects include AI action planning, isosurface generation to simulate deformable terrain, crowd flow, and pathfinding.

The biggest hurdle in multithreaded code is avoiding race conditions. If two threads write to the same data, which write will persist? Or if one thread reads while another writes, will the reader see the old or the new value? Tricky stuff.

I've found a neat trick that makes this whole multithreaded programming thing a lot more manageable. Frankly, after using it repeatedly, I've come to regard it as something of a magic bullet. In this blog post, I explain my approach in the hope that it will be useful to other (game) developers.

So my mechanism of choice can be summed up in one sentence: concentrate all the synchronization (semaphores, condition variables, and the like) in one single place, a thread-safe work queue.

This thread-safe queue is then used to set up a producer/consumer system for units of work. The producers live on the main thread and specify well-defined pieces of work that need to be performed. Those work specs are put in the queue.
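To make "well-defined" concrete, here is a minimal C++ sketch of what a work spec might look like, assuming a grid-based pathfinding job. `GridPos`, `PathJob`, and `run_path_job` are hypothetical names, and the straight-line stepping is only a stand-in for a real planner. The point is that the job carries copies of its inputs and its own output slot, so the worker never needs to touch shared simulation state.

```cpp
#include <cassert>
#include <vector>

// Hypothetical grid coordinate used by the example job.
struct GridPos {
    int x;
    int y;
};

// A self-contained unit of work. The producer (main thread) fills in the
// inputs before queuing the job; the worker writes only to `path`.
struct PathJob {
    // Inputs: copied in by the producer, read-only afterwards.
    GridPos from;
    GridPos to;
    // Output: written only by the worker that runs this job.
    std::vector<GridPos> path;
};

// Stand-in for real pathfinding: steps one cell at a time towards the
// target, first along x, then along y. It reads and writes nothing but `job`.
void run_path_job(PathJob& job) {
    assert(job.path.empty() && "a job must only be run once");
    GridPos p = job.from;
    job.path.push_back(p);
    while (p.x != job.to.x) {
        p.x += (job.to.x > p.x) ? 1 : -1;
        job.path.push_back(p);
    }
    while (p.y != job.to.y) {
        p.y += (job.to.y > p.y) ? 1 : -1;
        job.path.push_back(p);
    }
}
```

Filling in `from` and `to` and handing the job over is all the producer does; the main thread then leaves `path` alone until the job reports completion.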

The consumers of these jobs live on worker threads, with one consumer per thread, and often one thread per CPU core. A worker takes a job from the queue and goes to work, thereby performing the outsourced service that the producer on the main thread did not want to do itself.
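The consumer side can be sketched as a small pool with one worker per core. This is a hypothetical `WorkerPool`, not a specific library; for brevity its queue is unbounded and uses a single condition variable, unlike the bounded two-condvar queue described further on. `std::thread::hardware_concurrency()` may return 0 when the core count is unknown, hence the fallback to one worker.

```cpp
#include <algorithm>
#include <cassert>
#include <condition_variable>
#include <cstddef>
#include <deque>
#include <functional>
#include <mutex>
#include <thread>
#include <utility>
#include <vector>

// Hypothetical worker pool: one consumer thread per CPU core, all pulling
// jobs from a single shared queue. The mutex and condition variable below
// are the only synchronization in the system.
class WorkerPool {
public:
    WorkerPool() {
        // hardware_concurrency() may return 0; use at least one worker.
        unsigned n = std::max(1u, std::thread::hardware_concurrency());
        for (unsigned i = 0; i < n; ++i)
            workers_.emplace_back([this] { worker_loop(); });
    }

    ~WorkerPool() {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            stopping_ = true;
        }
        not_empty_.notify_all();
        for (std::thread& t : workers_) t.join();
    }

    WorkerPool(const WorkerPool&) = delete;
    WorkerPool& operator=(const WorkerPool&) = delete;

    // Producer side, called from the main thread.
    void submit(std::function<void()> job) {
        assert(job);
        {
            std::lock_guard<std::mutex> lock(mutex_);
            jobs_.push_back(std::move(job));
        }
        not_empty_.notify_one();
    }

    std::size_t worker_count() const { return workers_.size(); }

private:
    // Consumer side: each worker sleeps until a job arrives, then runs it.
    void worker_loop() {
        for (;;) {
            std::function<void()> job;
            {
                std::unique_lock<std::mutex> lock(mutex_);
                not_empty_.wait(lock, [this] { return stopping_ || !jobs_.empty(); });
                if (jobs_.empty()) return;  // stopping and queue drained
                job = std::move(jobs_.front());
                jobs_.pop_front();
            }
            job();  // run outside the lock so other workers can dequeue
        }
    }

    std::mutex mutex_;
    std::condition_variable not_empty_;
    std::deque<std::function<void()>> jobs_;
    bool stopping_ = false;
    std::vector<std::thread> workers_;
};
```

Jobs are `std::function<void()>` objects here, so a producer can capture a pointer to an entity's job struct in a lambda. On shutdown, the destructor lets the workers drain the remaining jobs before joining them.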

Note that the task-queue consumers are the actual service providers, and the task-queue producers are the clients of these services, which want the computation outsourced to another thread.

To communicate the results of the computations back, I use nothing fancy. All I do is have the worker write a boolean in memory to signal that the specific work is done. This is not protected by a mutex, because there is a well-defined order in which things happen: the worker (and only the worker) writes the boolean, and the client on the main thread (and only that client) reads it to see whether the work is done. I do this polling once every simulation frame. Every 'entity' in the simulation that has work outstanding checks its boolean for completion once per frame, and if it is set, it can safely use the results that have been stored in main memory. If a client just misses the completion, no biggie! It will be picked up in the next simulation frame.
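One caveat for C++ specifically: a plain `bool` written by one thread and read by another without synchronization is formally a data race, and on weakly ordered CPUs such as the ARM cores in an iPad, the main thread could in principle see the flag before it sees the results. Below is a minimal sketch of the same protocol using `std::atomic<bool>` with release/acquire ordering, which still involves no locks. `Entity`, `compute_plan`, `poll`, and `simulate` are hypothetical names, and launching one thread per job only keeps the example self-contained.

```cpp
#include <atomic>
#include <cassert>
#include <chrono>
#include <thread>
#include <vector>

// Hypothetical simulation entity with one outstanding background job.
struct Entity {
    std::vector<int> plan;          // written by the worker, read by main after done
    std::atomic<bool> done{false};  // set by the worker, polled by the main thread
    bool job_outstanding = false;   // touched by the main thread only
};

// Worker side: compute the result first, then publish completion.
// The release store makes the writes to `plan` visible to any thread
// that later observes done == true with an acquire load.
void compute_plan(Entity& e) {
    e.plan = {1, 2, 3};  // stand-in for an expensive computation
    e.done.store(true, std::memory_order_release);
}

// Main-thread side, called once per simulation frame.
// Returns true on the frame in which the result is picked up.
bool poll(Entity& e) {
    if (!e.job_outstanding) return false;
    if (!e.done.load(std::memory_order_acquire)) return false;  // missed it; next frame
    e.job_outstanding = false;
    assert(!e.plan.empty());  // safe to read: the worker is finished with it
    return true;
}

// Simulated main loop: launches the job, then polls once per "frame"
// (a 1 ms sleep stands in for the rest of the frame's work).
// Returns how many frames passed before the result was used.
int simulate(Entity& e) {
    e.job_outstanding = true;
    std::thread worker([&e] { compute_plan(e); });  // a real engine would use a pool
    int frames = 0;
    while (!poll(e)) {
        std::this_thread::sleep_for(std::chrono::milliseconds(1));
        ++frames;
    }
    worker.join();
    return frames;
}
```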

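Note that the magic bullet comes with one big drawback, which may or may not matter in your case: you lose determinism, because the frame in which a job's results are picked up depends on how quickly the worker threads happen to finish. But frankly, deterministic code is elusive anyway. For instance, hidden floating-point state, such as extended internal precision or the rounding mode, can differ between compilers, builds, and platforms, so in practice bit-exact deterministic floating-point code is very hard to achieve anyway.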

The actual implementation of a thread-safe queue is beyond the scope of this article, but it involves one mutex and two condition variables: one condvar signals that the queue is not empty (waking up consumers), and the other signals that the queue is not full (waking up producers). Of course, the queue needs to be deep enough that it never fills up, because a full queue would temporarily freeze your main thread.
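For reference, a bounded queue along those lines can be sketched with the C++ standard library alone. `WorkQueue` is a hypothetical name, and this is a minimal sketch rather than a production implementation: it has no shutdown mechanism and no non-blocking variant of `push`.

```cpp
#include <cassert>
#include <condition_variable>
#include <cstddef>
#include <deque>
#include <mutex>
#include <utility>

// Bounded blocking queue: one mutex guards the contents, `not_empty_`
// wakes consumers, and `not_full_` wakes producers.
template <typename T>
class WorkQueue {
public:
    explicit WorkQueue(std::size_t capacity) : capacity_(capacity) {
        assert(capacity > 0);
    }

    // Producer: blocks while the queue is full (which should never
    // happen in practice if the capacity is chosen generously).
    void push(T item) {
        std::unique_lock<std::mutex> lock(mutex_);
        not_full_.wait(lock, [this] { return items_.size() < capacity_; });
        items_.push_back(std::move(item));
        lock.unlock();
        not_empty_.notify_one();
    }

    // Consumer: blocks while the queue is empty.
    T pop() {
        std::unique_lock<std::mutex> lock(mutex_);
        not_empty_.wait(lock, [this] { return !items_.empty(); });
        T item = std::move(items_.front());
        items_.pop_front();
        lock.unlock();
        not_full_.notify_one();
        return item;
    }

    std::size_t size() const {
        std::lock_guard<std::mutex> lock(mutex_);
        return items_.size();
    }

private:
    const std::size_t capacity_;
    mutable std::mutex mutex_;
    std::condition_variable not_empty_;
    std::condition_variable not_full_;
    std::deque<T> items_;
};
```

The notifications are issued after unlocking, so a woken thread does not immediately block on the mutex again. To stop the workers, one common approach is to push one sentinel "quit" job per worker thread.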

Finally, I want to stress that this approach does not absolve you from being careful. You still need to make sure that a thread is not overwriting main memory willy-nilly. But being able to safely communicate which work is required and which work is completed is at least half the battle.