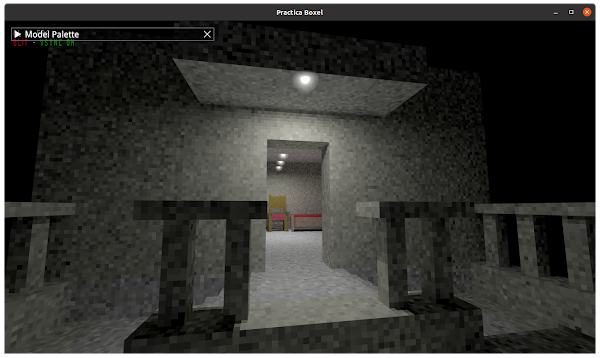

I am making a real-time Photon Mapper, called Practica Boxel.

Photon Mapping is an algorithm that computes Global Illumination for a scene. This means that, unlike traditional real-time rendering techniques, it does not merely compute the direct illumination, but also considers light that is diffusely reflected from one object onto another. This is an incredibly expensive technique, since a photon can take a near-infinite number of paths from a light source into your eye. Hence, most GI images are either very slow to compute, or very noisy.

The Photon Mapping algorithm is O(N) in the number of photons, i.e. linear. Traditionally it is even worse than that, since you cannot store a photon in a kD tree in O(1). But my underlying photon storage does have O(1) insertion, so in this case the total algorithm stays at O(N) and thus scales linearly.

Henrik Wann Jensen, the author of the Photon Mapping algorithm, mentions that you can keep the photon count independent from the number of light sources. If you increase the number of light sources in your scene, you do not necessarily need to increase the number of photons that you shoot. So far so good.

But still, I would like to prioritize my light sources, so that the sources that influence the scene illumination the most, shoot the most photons. I need to allocate a photon budget for each light source.

And this brings me to an interesting problem to solve. If my camera roams freely in the world, I need to pick out which light sources are closest to the camera. These sources are more likely to affect the illumination of the parts of the scene that are close to the camera.

My photon mapper has an additional requirement: for fast indexing, I need my light-source count to be a power of 2. So if there are 9 sources in the world, I actually need to feed my computational kernel 16 sources, some of which are copies. A source that is represented twice can simply be scaled down in brightness, so that both copies combine to the same power output.
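As a minimal sketch of this padding step: the `Light` class and the function names below are hypothetical, and the sketch assumes the input list is ordered so that the lights that should be duplicated come first. Each light's power is split evenly over its copies, so the total emitted power is unchanged.

```python
from dataclasses import dataclass


@dataclass
class Light:
    name: str
    power: float  # total emitted power, arbitrary units (hypothetical field)


def next_power_of_two(n: int) -> int:
    """Smallest power of two that is >= n, for n >= 1."""
    return 1 << (n - 1).bit_length()


def pad_to_power_of_two(lights: list[Light]) -> list[Light]:
    """Duplicate lights until the count is a power of two.

    Assumption: lights earlier in the list are the ones to duplicate.
    A duplicated light has its power split over its copies.
    """
    n = len(lights)
    m = next_power_of_two(n)
    # Every light gets one slot; the first (m - n) lights get a second one.
    # Since m < 2n, no light ever needs more than two slots here.
    copies = [1] * n
    for i in range(m - n):
        copies[i] += 1
    virtual = []
    for light, c in zip(lights, copies):
        virtual.extend(Light(light.name, light.power / c) for _ in range(c))
    return virtual
```

With 9 input lights this yields 16 virtual lights: 7 lights appear twice at half power, and the last 2 appear once at full power.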

It would be beneficial if, out of the original 9, the light source furthest from the camera were the one that is not duplicated, so that it shoots fewer photons (only half as many as a duplicated source).

But I don't have to stop at mere duplication. I could just create 256 light sources out of the original 9, and do this in such a way that a very nearby light (e.g. a head-mounted light) gets many copies. Let's say 20 of them. And have the street lamp, all the way at the far end of the street, be represented by just one. Once fired, those photons have to be scaled of course: even though the street lamp only shoots a few, those that it does shoot have higher power, whereas our 20-fold duplicated head-lamp shoots much fainter photons. However, its photon count is really high, so the noise up close to the camera is lower.

So here we have my interesting problem:

- Given N light sources L1..LN...

- With distances to the camera D1..DN...

- How to map them onto M (which is 2ᵏ) virtual sources LV1..LVM...

- So that if source Q is F times further from the camera than source R...

- It is mapped roughly F times less often onto the LV set than source R.

- k can be chosen freely, up to, let's say, 10.
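One possible way to attack the full problem is to treat it as an apportionment problem: give each source a weight of 1/D and round the ideal shares with the largest-remainder method. This is only a sketch of that idea, not something I have implemented; the function name `apportion` is made up, it assumes all distances are positive, and it reserves one virtual source per light so that no light goes dark, which makes the proportionality only approximate.

```python
def apportion(distances: list[float], m: int) -> list[int]:
    """Split m virtual sources over the lights, roughly proportional to 1/distance.

    Assumptions: distances are > 0, and every light keeps at least one slot.
    """
    n = len(distances)
    if m < n:
        raise ValueError("need at least one virtual source per light")
    weights = [1.0 / d for d in distances]
    total = sum(weights)
    # Reserve one slot per light, then share the spare slots by weight.
    spare = m - n
    ideal = [spare * w / total for w in weights]
    counts = [1 + int(x) for x in ideal]
    # Largest-remainder rounding: leftover slots go to the biggest fractions.
    leftover = m - sum(counts)
    by_fraction = sorted(range(n), key=lambda i: ideal[i] - int(ideal[i]), reverse=True)
    for i in by_fraction[:leftover]:
        counts[i] += 1
    return counts
```

For distances 1, 2, 4, 8 and M = 16 this gives the counts 7, 4, 3, 2. The ratios are flatter than the ideal 8:4:2:1 because of the reserved slot per light.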

This seems a non-trivial problem to solve, so I am leaning towards a simplified version of it, where I classify each original source L as:

- FARTHEST

- FAR

- MED

- NEAR

And subsequently assign them to 1, 2, 3 or 4 virtual sources, respectively. After adding up the assignments, round the total up to a power of 2, and distribute the remainder T to the T nearest sources. Scale down the power of each virtual source depending on the representation count.
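A rough sketch of this simplified scheme follows. How the four classes are decided is not specified above, so the sketch assumes rank quartiles: the nearest quarter of the sources is NEAR, the next quarter MED, and so on. It also assumes that when the remainder T exceeds the number of sources, distribution simply wraps around, nearest first. The function name is hypothetical.

```python
def classify_and_assign(distances: list[float]) -> list[int]:
    """Return the number of virtual sources per light, using four distance classes."""
    n = len(distances)
    order = sorted(range(n), key=lambda i: distances[i])  # nearest first
    counts = [0] * n
    for rank, i in enumerate(order):
        # Assumed classification by rank quartile:
        # NEAR -> 4, MED -> 3, FAR -> 2, FARTHEST -> 1.
        counts[i] = 4 - (rank * 4) // n
    total = sum(counts)
    target = 1 << (total - 1).bit_length()  # round up to a power of two
    remainder = target - total
    # Hand the remainder T to the nearest sources, wrapping around if T > N.
    for j in range(remainder):
        counts[order[j % n]] += 1
    return counts


def scaled_power(power: float, count: int) -> float:
    """Power of each virtual copy, so that all copies together emit `power`."""
    return power / count
```

For 9 sources the class assignments add up to 24, which rounds up to 32, so the 8 nearest sources each receive one extra virtual source.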

I'll report back once I have implemented this, and evaluated how it behaves under a roaming camera in a larger scene.

UPDATE

In the end, I went for a slightly different approach: allocate (k / distance) emitters for each light source, so that nearer sources get more. I do a binary search for a value of k that results in a total emitter count of 128. Done.
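A minimal sketch of such a search is below, not my actual kernel code. It assumes the per-light emitter count is inversely proportional to distance (k / distance, rounded, with a minimum of one), in line with the goal of giving nearby lights more emitters. Because of rounding, the total can jump past 128 without ever hitting it, so the sketch takes the largest k that does not overshoot and tops up the nearest lights. All names are hypothetical.

```python
def emitters_for(k: float, distances: list[float]) -> list[int]:
    """Emitters per light for a given k: inverse to distance, at least one each."""
    return [max(1, round(k / d)) for d in distances]


def solve_emitters(distances: list[float], target: int = 128) -> list[int]:
    """Binary-search k so that the total emitter count reaches `target`."""
    n = len(distances)
    if target < n:
        raise ValueError("target must be at least the number of lights")
    # At k = 0 every light gets 1 emitter; at hi every light gets >= target.
    lo, hi = 0.0, target * max(distances)
    for _ in range(64):
        mid = 0.5 * (lo + hi)
        if sum(emitters_for(mid, distances)) > target:
            hi = mid
        else:
            lo = mid  # invariant: the total at lo never exceeds target
    counts = emitters_for(lo, distances)
    # Rounding may leave a small shortfall; top up the nearest lights first.
    order = sorted(range(n), key=lambda i: distances[i])
    shortfall = target - sum(counts)
    for j in range(shortfall):
        counts[order[j % n]] += 1
    return counts
```

The total is monotone in k, which is what makes the binary search valid. Each light's power still has to be divided by its emitter count afterwards.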