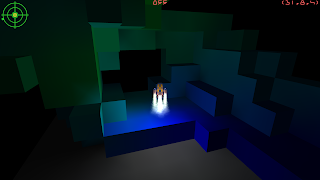

So I have been developing a new game in which a rocket engine globally illuminates its environment. It started out as an AVX2 implementation, but since then I have been exploring other acceleration techniques. For instance, can I run the photon mapping algorithm on the GPU instead? Here are some initial results for the different approaches.

Some conclusions I drew from this: OpenCL has not been worth the effort. It takes 4 CPU cores to reach a speed that is still significantly below that of my hand-optimized AVX2 implementation running on a single core.

Apple's OpenCL seems to be in bad shape. I could not get it to run on the GPU, and running it on the CPU was 3.5 times slower than on Linux.

The GLSL implementation seems promising. A dependency on OpenGL ES 3.1 or OpenGL 3.2 is less of a barrier than the AVX2 dependency. With some temporal caching and reduced photon counts, it should be able to reach a solid 60fps on integrated GPUs, and maybe even 60fps on future mobile CPUs.
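To make the temporal caching idea a bit more concrete, here is a minimal C++ sketch of one way it could work. It is not the game's actual code: the `LightCache` type, the one-float-per-cell layout, and the `alpha` blend weight are all assumptions for illustration. Each frame traces fewer photons, and the noisy result is blended into a cache that persists across frames.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical light cache: one accumulated light value per grid cell.
// The layout and names are assumptions, not taken from the game.
struct LightCache {
    std::vector<float> value;

    explicit LightCache(std::size_t cells) : value(cells, 0.0f) {}

    // Blend this frame's (noisy, low photon count) estimate into the cache.
    // alpha is the weight of the new frame: a small alpha gives smoother
    // but slower-reacting lighting, a large alpha reacts fast but is noisier.
    void accumulate(const std::vector<float>& frame, float alpha) {
        for (std::size_t i = 0; i < value.size() && i < frame.size(); ++i) {
            value[i] += alpha * (frame[i] - value[i]);
        }
    }
};
```

The same exponential blend could in principle be done in a shader by reading last frame's light texture and writing the mix to a new one. The trade-off is lag: light from a moving source such as a rocket engine trails behind it when `alpha` is small.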